Vitalik Buterin

Founder of Ethereum


Opinions

Two years ago, I wrote this post on the possible areas that I see for ethereum + AI intersections: https://t.co/y8G3MD5APF

This is a topic that many people are excited about, but where I always worry that we think about the two from completely separate philosophical perspectives.

I am reminded of Toly's recent tweet that I should "work on AGI". I appreciate the compliment, for him to think that I am capable of contributing to such a lofty thing. However, I get this feeling that the frame of "work on AGI" itself contains an error: it is fundamentally undifferentiated, and has the connotation of "do the thing that, if you don't do it, someone else will do anyway two months later; the main difference is that you get to be the one at the top" (though this may not have been Toly's intention). It would be like describing Ethereum as "working in finance" or "working on computing".

To me, Ethereum, and my own view of how our civilization should do AGI, are precisely about choosing a positive direction rather than embracing undifferentiated acceleration of the arrow, and also I think it's actually important to integrate the crypto and AI perspectives.

I want an AI future where:

* We foster human freedom and empowerment (ie. we avoid both humans being relegated to retirement by AIs, and permanently stripped of power by human power structures that become impossible to surpass or escape)

* The world does not blow up (both "classic" superintelligent AI doom, and more chaotic scenarios from various forms of offense outpacing defense, cf. the four defense quadrants from the d/acc posts)

In the long term, this may involve crazy things like humans uploading or merging with AI, for those who want to be able to keep up with highly intelligent entities that can think a million times faster on silicon substrate. In the shorter term, it involves much more "ordinary" ideas, but still ideas that require deep rethinking compared to previous computing paradigms.

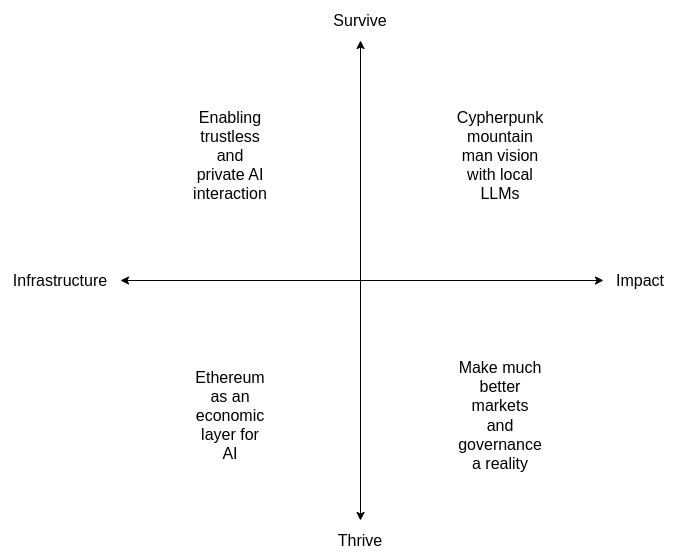

So now, my updated view, which definitely focuses on that shorter term, and where Ethereum plays an important role but is only one piece of a bigger puzzle:

# Building tooling to make more trustless and/or private interaction with AIs possible.

This includes:

* Local LLM tooling

* ZK-payment for API calls (so you can call remote models without linking your identity from call to call)

* Ongoing work into cryptographic ways to improve AI privacy

* Client-side verification of cryptographic proofs, TEE attestations, and any other forms of server-side assurance

Basically, the kinds of things we might also build for non-LLM compute (see eg. my ethereum privacy roadmap from a year ago https://t.co/KdsQbpIkF9 ), but for LLM calls as the compute we are protecting.

# Ethereum as an economic layer for AI-related interactions

This includes:

* API calls

* Bots hiring bots

* Security deposits, potentially eventually more complicated contraptions like onchain dispute resolution

* ERC-8004, AI reputation ideas

The goal here is to enable AIs to interact economically, which makes viable more decentralized AI architectures (as opposed to non-economic coordination between AIs that are all designed and run by one organization "in-house"). Economies not for the sake of economies, but to enable more decentralized authority.

# Make the cypherpunk "mountain man" vision a reality

Basically, take the vision that cypherpunk radicals have always dreamed of (don't trust; verify everything), that has been nonviable in reality because humans are never actually going to verify all the code ourselves. Now, we can finally make that vision happen, with LLMs doing the hard parts.

This includes:

* Interacting with ethereum apps without needing third party UIs

* Having a local model propose transactions for you on its own

* Having a local model verify transactions created by dapp UIs

* Local smart contract auditing, and assistance interpreting the meaning of FV proofs provided by others

* Verifying trust models of applications and protocols
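
As a rough illustration of what "verify transactions created by dapp UIs" could look like at the lowest level, here is a minimal sketch of a deterministic pre-check that a local tool might run before handing a transaction to a model or a human. It only decodes two standard ERC-20 calls; the function name `describe_erc20_call`, the returned field names, and the example calldata are assumptions made for this sketch, not part of any existing wallet or library.

```python
# Well-known ERC-20 selectors: the first 4 bytes of keccak256(signature).
KNOWN_SELECTORS = {
    "a9059cbb": "transfer(address,uint256)",
    "095ea7b3": "approve(address,uint256)",
}
MAX_UINT256 = 2**256 - 1


def describe_erc20_call(calldata_hex: str) -> dict:
    """Decode a two-argument ERC-20 call into a reviewable summary (hypothetical helper)."""
    raw = calldata_hex[2:] if calldata_hex.startswith("0x") else calldata_hex
    data = bytes.fromhex(raw)
    if len(data) != 4 + 32 + 32:
        raise ValueError("expected a 4-byte selector plus two 32-byte words")
    selector = data[:4].hex()
    if selector not in KNOWN_SELECTORS:
        raise ValueError(f"unrecognized selector 0x{selector}")
    address_word = data[4:36]
    # ABI encoding left-pads a 20-byte address with 12 zero bytes.
    if any(address_word[:12]):
        raise ValueError("address word has non-zero padding")
    amount = int.from_bytes(data[36:68], "big")
    return {
        "function": KNOWN_SELECTORS[selector],
        "counterparty": "0x" + address_word[12:].hex(),
        "amount": amount,
        # An approval for the maximum uint256 is the classic "unlimited allowance".
        "unlimited_approval": selector == "095ea7b3" and amount == MAX_UINT256,
    }


# Hypothetical calldata a dapp UI might propose: approve(0x1111...1111, 2**256 - 1)
proposed = "0x095ea7b3" + "00" * 12 + "11" * 20 + "ff" * 32
summary = describe_erc20_call(proposed)
print(summary)
```

A local model would sit on top of checks like this, explaining the summary in plain language and comparing it against what the UI claims the transaction does.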

# Make much better markets and governance a reality

Prediction and decision markets, decentralized governance, quadratic voting, combinatorial auctions, universal barter economy, and all kinds of constructions are all beautiful in theory, but have been greatly hampered in reality by one big constraint: limits to human attention and decision-making power.

LLMs remove that limitation, and massively scale human judgement. Hence, we can revisit all of those ideas.

These are all things that Ethereum can help to make a reality. They are also ideas that are in the d/acc spirit: enabling decentralized cooperation, and improving defense. We can revisit the best ideas from 2014, and add on top many more new and better ones, and with AI (and ZK) we have a whole new set of tools to make them come to life.

We can describe the above as a 2x2 chart. There's a lot to build!

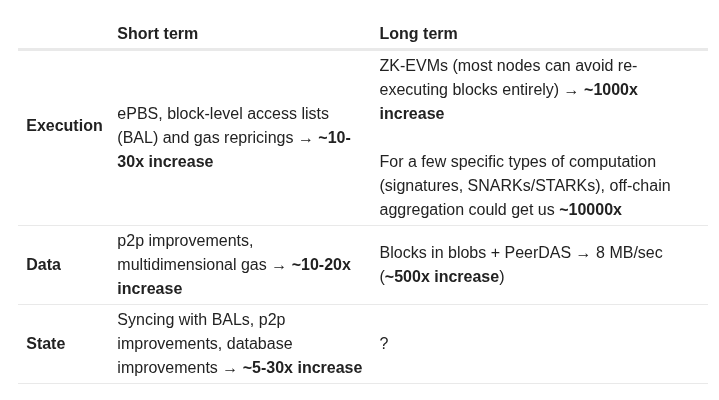

Hyper-scaling Ethereum state by creating new forms of state:

https://t.co/7nL9qOQqxO

Summary:

* We want 1000x scale on Ethereum L1. We roughly know how to do this for execution and data. But scaling state is fundamentally harder.

* The most practical path for Ethereum may actually be to scale existing state only a medium amount, and at the same time introduce newer forms of state that would be extremely cheap but also more restrictive in how you can use them.

* In such a design, the present-day state tree would over time become dominated by user accounts, defi hub contracts, code, and other high-value objects, while all kinds of individual per-user state objects (eg. ERC20 balances, NFTs, CDPs) would be handled with cheaper but more restrictive tools. Building developer abstractions that make this easy to implement for the use cases that make up >90% of state today seems very doable.

Have been following reactions to what I said about L2s about 1.5 days ago.

One important thing that I believe is: "make yet another EVM chain and add an optimistic bridge to Ethereum with a 1 week delay" is to infra what forking Compound is to governance - something we've done far too much for far too long, because we got comfortable, and which has sapped our imagination and put us in a dead end.

If you make an EVM chain *without* an optimistic bridge to Ethereum (aka an alt L1), that's even worse. We don't friggin need more copypasta EVM chains, and we definitely don't need even more L1s. L1 is scaling and is going to bring lots of EVM blockspace - not infinite (AIs in particular will need both more blockspace and lower latency than even a greatly scaled L1 can offer), but lots.

Build something that brings something new to the table. I gave a few examples: privacy, app-specific efficiency, ultra-low latency, but my list is surely very incomplete.

A second important thing that I believe is: regarding "connection to Ethereum", vibes need to match substance.

I personally am a fan of many of the things that can be called "app chains". For example I think there's a large chance that the optimal architecture for prediction markets is something like: the market gets issued and resolved on L1, user accounts are on L1, but trading happens on some based rollup or other L2-like system, where the execution reads the L1 to verify signatures and markets. I like architectures where deep connection to L1 is first-class, and not an afterthought ("we're pretty much a separate chain, but oh yeah, we have a bridge, and ok fine let's put 1-2 devs to get it to stage 1 so the l2beat people will put a green checkmark on it so vitalik likes us").

The other extreme of "app chain", eg. the version where you convince some government registry, or social media platform, or gaming thing, to start putting merkle roots of its database, with STARKs that prove every update was authorized and signed and executed according to a pre-committed algorithm, onchain, is also reasonable - this is what makes the most sense to me in terms of "institutional L2s". It's obviously not Ethereum, not credibly neutral and not trustless - the operator can always just choose to say "we're switching to a different version with different rules now". But it would enable verifiable algorithmic transparency, a property that many of us would love to see in government, social media algorithms or wherever else, and it may enable economic activity that would otherwise not be possible.

I think if you're the first thing, it's valid and great to call yourself an Ethereum application - it can't survive without Ethereum even technologically, it maximizes interoperability and composability with other Ethereum applications.

If you're the second thing, then you're not Ethereum, but you are (i) bringing humanity more algorithmic transparency and trust minimization, so you're pursuing a similar vision, and (ii) depending on details probably synergistic with Ethereum. So you should just say those things directly!

Basically:

1. Do something that brings something actually new to the table.

2. Vibes should match substance - the degree of connection to Ethereum in your public image should reflect the degree of connection to Ethereum that your thing has in reality.

There have recently been some discussions on the ongoing role of L2s in the Ethereum ecosystem, especially in the face of two facts:

* L2s' progress to stage 2 (and, secondarily, on interop) has been far slower and more difficult than originally expected

* L1 itself is scaling, fees are very low, and gas limits are projected to increase greatly in 2026

Both of these facts, for their own separate reasons, mean that the original vision of L2s and their role in Ethereum no longer makes sense, and we need a new path.

First, let us recap the original vision. Ethereum needs to scale. The definition of "Ethereum scaling" is the existence of large quantities of block space that is backed by the full faith and credit of Ethereum - that is, block space where, if you do things (including with ETH) inside that block space, your activities are guaranteed to be valid, uncensored, unreverted, untouched, as long as Ethereum itself functions. If you create a 10000 TPS EVM where its connection to L1 is mediated by a multisig bridge, then you are not scaling Ethereum.

This vision no longer makes sense. L1 does not need L2s to be "branded shards", because L1 is itself scaling. And L2s are not able or willing to satisfy the properties that a true "branded shard" would require. I've even seen at least one explicitly saying that they may never want to go beyond stage 1, not just for technical reasons around ZK-EVM safety, but also because their customers' regulatory needs require them to have ultimate control. This may be doing the right thing for your customers. But it should be obvious that if you are doing this, then you are not "scaling Ethereum" in the sense meant by the rollup-centric roadmap. But that's fine! It's fine because Ethereum itself is now scaling directly on L1, with large planned increases to its gas limit this year and the years ahead.

We should stop thinking about L2s as literally being "branded shards" of Ethereum, with the social status and responsibilities that this entails. Instead, we can think of L2s as being a full spectrum, which includes both chains backed by the full faith and credit of Ethereum with various unique properties (eg. not just EVM), as well as a whole array of options at different levels of connection to Ethereum, that each person (or bot) is free to care about or not care about depending on their needs.

What would I do today if I were an L2?

* Identify a value add other than "scaling". Examples: (i) non-EVM specialized features/VMs around privacy, (ii) efficiency specialized around a particular application, (iii) truly extreme levels of scaling that even a greatly expanded L1 will not do, (iv) a totally different design for non-financial applications, eg. social, identity, AI, (v) ultra-low-latency and other sequencing properties, (vi) maybe built-in oracles or decentralized dispute resolution or other "non-computationally-verifiable" features

* Be stage 1 at the minimum (otherwise you really are just a separate L1 with a bridge, and you should just call yourself that) if you're doing things with ETH or other ethereum-issued assets

* Support maximum interoperability with Ethereum, though this will differ for each one (eg. what if you're not EVM, or even not financial?)

From Ethereum's side, over the past few months I've become more convinced of the value of the native rollup precompile, particularly once we have enshrined ZK-EVM proofs that we need anyway to scale L1. This is a precompile that verifies a ZK-EVM proof, and it's "part of Ethereum", so (i) it auto-upgrades along with Ethereum, and (ii) if the precompile has a bug, Ethereum will hard-fork to fix the bug.

The native rollup precompile would make full, security-council-free, EVM verification accessible. We should spend much more time working out how to design it in such a way that if your L2 is "EVM plus other stuff", then the native rollup precompile would verify the EVM, and you only have to bring your own prover for the "other stuff" (eg. Stylus). This might involve a canonical way of exposing a lookup table between contract call inputs and outputs, and letting you provide your own values to the lookup table (that you would prove separately).

This would make it easy to have safe, strong, trustless interoperability with Ethereum. It also enables synchronous composability (see: https://t.co/9jy6v1X6Fw and https://t.co/gZmu3YjebM ). And from there, it's each L2's choice exactly what they want to build. Don't just "extend L1", figure out something new to add.

This of course means that some will add things that are trust-dependent, or backdoored, or otherwise insecure; this is unavoidable in a permissionless ecosystem where developers have freedom. Our job should be to make it clear to users what guarantees they have, and to build up the strongest Ethereum that we can.

I actually don't think it's complicated.

IMO the future of onchain mechanism design is mostly going to fit into one pattern:

[something that looks like a prediction market] -> [something that looks like a capture-resistant, non-financialized preference-setting gadget]

In other words:

* One layer that is maximally open and maximizes accountability (it's a market, anyone can buy and sell; if you make good decisions you win money, if you make bad decisions you lose money)

* One layer that is decentralized and pluralistic, and that maximizes space for intrinsic motivation. This cannot be token-based, because token owners are not pluralistic, and anyone can buy in and get 51% of them. Votes here should be anonymous, ideally MACI'd to reduce risk of collusion.

The prediction market is the correct way to do a "decentralized executive", because the most logical primitive for "accountability" in a permissionless context is exactly that.

Though sometimes you will want to keep it simple, and do a centralized executive at that layer instead:

[replaceable centralized executive] -> [something that looks like a capture-resistant, non-financialized preference-setting gadget]

Thinking in these two layers explicitly: (i) what is doing your execution, (ii) what is doing your preference-setting and is judging the executor(s), is best.

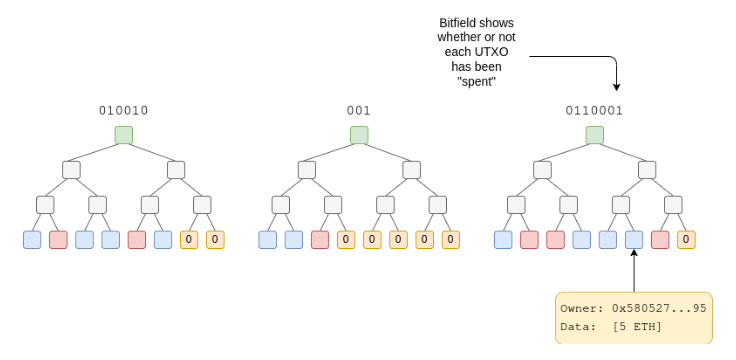

I no longer agree with this previous tweet of mine - since 2017, I have become a much more willing connoisseur of mountains. It's worth explaining why.

https://x.com/VitalikButerin/status/873177382164848641

First, the original context. That tweet was in a debate with Ian Grigg, who argued that blockchains should track the order of transactions, but not the state (eg. user balances, smart contract code and storage):

> The messages are logged, but the state (e.g., UTXO) is implied, which means it is constructed by the computer internally, and then (can be) thrown away.

I was heavily against this philosophy, because it would imply that users have no way to get the state other than either (i) running a node that processed every transaction in all of history, or (ii) trusting someone else.

In blockchains that commit to the state in the block header (like Ethereum), you can simply prove any value in the state with a Merkle branch. This is conditional on the honest majority assumption: if >= 50% of the consensus participants are honest, then the chain with the most PoW (or PoS) support will be valid, and so the state root will be correct.
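
To make "prove any value in the state with a Merkle branch" concrete, here is a minimal sketch using a plain binary Merkle tree over SHA-256. This is a simplification chosen for readability: Ethereum's actual state commitment is a hexary Merkle Patricia trie using Keccak-256, and the leaf format, function names, and example balances below are assumptions for illustration only. The sketch also assumes the number of leaves is a power of two.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def next_layer(layer):
    # Hash adjacent pairs to build the layer above.
    return [h(layer[i] + layer[i + 1]) for i in range(0, len(layer), 2)]


def merkle_root(leaves):
    layer = [h(leaf) for leaf in leaves]
    while len(layer) > 1:
        layer = next_layer(layer)
    return layer[0]


def merkle_branch(leaves, index):
    """Collect the sibling hash at every level on the path from leaf to root."""
    layer = [h(leaf) for leaf in leaves]
    branch = []
    while len(layer) > 1:
        branch.append(layer[index ^ 1])  # sibling differs only in the lowest bit
        layer = next_layer(layer)
        index //= 2
    return branch


def verify_branch(root, leaf, index, branch):
    """Recompute the root from one leaf and its branch; O(log n) hashes."""
    node = h(leaf)
    for sibling in branch:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


# Hypothetical four-account "state"; only the root would sit in the block header.
state = [b"alice:10", b"bob:25", b"carol:7", b"dave:0"]
root = merkle_root(state)
proof = merkle_branch(state, 1)

print(verify_branch(root, b"bob:25", 1, proof))   # genuine value
print(verify_branch(root, b"bob:9999", 1, proof)) # tampered value
```

The verifier needs only the root, the claimed value, and a logarithmic number of sibling hashes, which is why a light client can check a balance without processing the chain's history, provided it trusts that the root itself is correct.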

Trusting an honest majority is far better than trusting a single RPC provider. Not trusting at all (by personally verifying every transaction in the chain) is theoretically ideal, but it's a computation load infeasible for regular users, unless we take the (even worse) tradeoff of keeping blockchain capacity so low that most people cannot even use the chain.

Now, what has changed since then?

The biggest thing is of course ZK-SNARKs. We now have a technology that lets you verify the correctness of the chain, without literally re-executing every transaction. WE INVENTED THE THING THAT GETS YOU THE BENEFITS WITHOUT THE COSTS! This is like if someone from the future teleported back into US healthcare debates in 2008, and demonstrated a clearly working pill that anyone could make for $15 that cured all diseases. Like, yes, if we have that pill, we should get the government fully out of healthcare, let people make the pill and sell it at Walgreens, and healthcare becomes super affordable so everyone is happy. ZK-SNARKs are literally like that but for the block size war. (With two asterisks for block building centralization and data bandwidth, but that's a separate topic)

With better technology, we should raise our expectations, and revisit tradeoffs that we made grudgingly in a previous era.

But also, I have actually changed my mind on some of the underlying issues. In 2017, I was thinking about blockchains in terms of academic assumptions - what is okay to rely on honest majority for, when we are ok with a 1-of-N trust assumption, etc. If a construction gave better properties under known-acceptable assumptions, I would eagerly embrace it.

On a raw subconscious level, I don't think I was sufficiently appreciative of the fact that _in the real world, lots of things break_. Sometimes the p2p network goes down. Sometimes the p2p network has 20x the latency you expected - anyone who has played WoW can attest to long spans of time when the latency spiked up from its usual ~200ms to 1000-5000ms. Sometimes a third party service you've been relying on for years shuts down, and there isn't a good alternative. If the alternative is that you personally go through a github repo and figure out how to PERSONALLY RUN A SERVER, lots of people will give up and never figure it out and end up permanently losing access to their money. Sometimes mining or staking gets concentrated to the point where 51% attacks are very easy to imagine, and you almost have to game-theoretically analyze consensus security as though 75% of miners or stakers are controlled by one single agent. Sometimes, as we saw with tornado cash, intermediaries all start censoring some application, and your *only* option becomes to directly use the chain.

If we are making a self-sovereign blockchain to last through the ages, THE ANSWER TO THE ABOVE CONUNDRUMS CANNOT ALWAYS BE "CALL THE DEVS". If it is, the devs themselves become the point of centralization - they become DEVS in the ancient Roman sense, where the letter V was used to represent the U sound.

The Mountain Man's cabin is not meant as the replacement lifestyle for everyone. It is meant as the safe place to retreat to when things go wrong. It is also meant as the universal BATNA ("Best Alternative to a Negotiated Agreement") - the alternative option that improves your well-being not just in the case when you end up needing it, but also because knowledge of it existing motivates third parties to give you better terms. This is like how Bittorrent existing is an important check on the power of music and video streaming platforms, driving them to offer customers better terms.

We do not need to start living every day in the Mountain Man's cabin. But part of maintaining the infinite garden of Ethereum is certainly keeping the cabin well-maintained.

We need more DAOs - but different and better DAOs.

The original drive to build Ethereum was heavily inspired by decentralized autonomous organizations: systems of code and rules that lived on decentralized networks that could manage resources and direct activity, more efficiently and more robustly than traditional governments and corporations could.

Since then, the concept of DAOs has migrated to essentially referring to a treasury controlled by token holder voting - a design which "works", hence why it got copied so much, but a design which is inefficient, vulnerable to capture, and fails utterly at the goal of mitigating the weaknesses of human politics. As a result, many have become cynical about DAOs.

But we need DAOs.

* We need DAOs to create better oracles. Today, decentralized stablecoins, prediction markets, and other basic building blocks of defi are built on oracle designs that we are not satisfied with. If the oracle is token based, whales can manipulate the answer on a subjective issue and it becomes difficult to counteract them. Fundamentally, a token-based oracle cannot have a cost of attack higher than its market cap, which in turn means it cannot secure assets without extracting rent higher than the discount rate. And if the oracle uses human curation, then it's not very decentralized. The problem here is not greed. The problem is that we have bad oracle designs, we need better ones, and bootstrapping them is not just a technical problem but also a social problem.

* We need DAOs for onchain dispute resolution, a necessary component of many types of more advanced smart contract use cases (eg. insurance). This is the same type of problem as price oracles, but even more subjective, and so even harder to get right.

* We need DAOs to maintain lists. This includes: lists of applications known to be secure or not scams, lists of canonical interfaces, lists of token contract addresses, and much more.

* We need DAOs to get projects off the ground quickly. If you have a group of people, who all want something done and are willing to contribute some funds (perhaps in exchange for benefits), then how do you manage this, especially if the task is too short-duration for legal entities to be worth it?

* We need DAOs to do long-term project maintenance. If the original team of a project disappears, how can a community keep going, and how can new people coming in get the funding they need?
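
The cost-of-attack claim in the oracle bullet above can be read as a short piece of arithmetic. The sketch below is one simplified interpretation, not a formal model: it assumes an attacker can gain at most the value the oracle secures, that attacking costs at most the token's market cap, and that token holders require at least the discount rate as a yearly return. The function name and the example figures are hypothetical.

```python
def min_annual_rent(secured_value: int, discount_rate_bps: int) -> int:
    """Lower bound on yearly fees under the simplified assumptions above."""
    # Cost of attack <= market cap, so deterring an attacker who could gain
    # `secured_value` requires a market cap of at least `secured_value`.
    min_market_cap = secured_value
    # Holders of that market cap need at least the discount rate as a return,
    # and that return has to be paid by the applications relying on the oracle.
    return min_market_cap * discount_rate_bps // 10_000


secured = 1_000_000_000  # hypothetical: $1B of assets depend on the oracle
rate_bps = 500           # hypothetical: 5% yearly discount rate, in basis points
rent = min_annual_rent(secured, rate_bps)
print(rent)
```

Under these assumptions the rent scales linearly with the value secured, so as a fraction of secured assets it can never drop below the discount rate.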

One framework that I use to analyze this is "convex vs concave" from https://t.co/1BrMsUAKWK . If the DAO is solving a concave problem, then it is in an environment where, if faced with two possible courses of action, a compromise is better than a coin flip. Hence, you want systems that maximize robustness by averaging (or rather, medianing) in input from many sources, and protect against capture and financial attacks. If the DAO is solving a convex problem, then you want the ability to make decisive choices and follow through on them. In this case, leaders can be good, and the job of the decentralized process should be to keep the leaders in check.
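
A small numeric sketch of the distinction, under stated assumptions: the quadratic payoff functions and the sample inputs are made up for illustration, and "compromise" is modeled simply as picking the midpoint between two options. It also shows why medianing is more capture-resistant than averaging when one input is manipulated.

```python
from statistics import mean, median


def compromise_vs_coin_flip(payoff, a, b):
    """Return (payoff of the midpoint, expected payoff of flipping a coin)."""
    compromise = payoff((a + b) / 2)
    coin_flip = (payoff(a) + payoff(b)) / 2
    return compromise, coin_flip


def concave(x):
    # Hypothetical concave payoff: best outcome in the middle (x = 5).
    return -((x - 5) ** 2)


def convex(x):
    # Hypothetical convex payoff: committing to either extreme beats the middle.
    return (x - 5) ** 2


c_mid, c_flip = compromise_vs_coin_flip(concave, 0, 10)
v_mid, v_flip = compromise_vs_coin_flip(convex, 0, 10)
print(c_mid > c_flip)  # concave: compromise wins
print(v_mid < v_flip)  # convex: a decisive choice wins

# Robust aggregation: one manipulated report drags the mean, not the median.
reports = [100, 101, 99, 102, 10_000]
print(median(reports), mean(reports))
```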

For all of this to work, we need to solve two problems: privacy, and decision fatigue. Without privacy, governance becomes a social game (see https://t.co/uMXcuzQNjM ). And if people have to make decisions every week, for the first month you see excited participation, but over time willingness to participate, and even to stay informed, declines.

I see modern technology as opening the door to a renaissance here. Specifically:

* ZK (and in some cases MPC/FHE, though these should be used only when ZK alone cannot solve the problem) for privacy

* AI to solve decision fatigue

* Consensus-finding communication tools (like https://t.co/Nzord32Ub1, but going further)

AI must be used carefully: we must *not* put full-size deepseek (or worse, GPT 5.2) in charge of a DAO and call it a day. Rather, AI must be put in thoughtfully, as something that scales and enhances human intention and judgement, rather than replacing it. This could be done at DAO level (eg. see how https://t.co/pCHI7Mlo2m works), or at individual level (user-controlled local LLMs that vote on their behalf).

It is important to think about the "DAO stack" as also including the communication layer, hence the need for forums and platforms specially designed for the purpose. A multisig plus well-designed consensus-finding tools can easily beat idealized collusion-resistant quadratic funding plus crypto twitter.

But in all cases, we need new designs. Projects that need new oracles and want to build their own should see that as 50% of their job, not 10%.

Projects working on new governance designs should build with ZK and AI in mind, and they should treat the communication layer as 50% of their job, not 10%.

This is how we can ensure the decentralization and robustness of the Ethereum base layer also applies to the world that gets built on top.